Chaos Engineering For Elasticsearch

Introduction

Gremlin is a simple, safe and secure service for performing Chaos Engineering experiments through a SaaS-based platform. Elasticsearch is a search engine based on Apache Lucene. It provides a distributed, multitenant-capable full-text search engine with an HTTP web interface and schema-free JSON documents. Datadog is a monitoring service for cloud-scale applications, providing monitoring of servers, databases, tools, and services, through a SaaS-based data analytics platform. Datadog provides an integration to monitor Elasticsearch.

Chaos Engineering Hypothesis

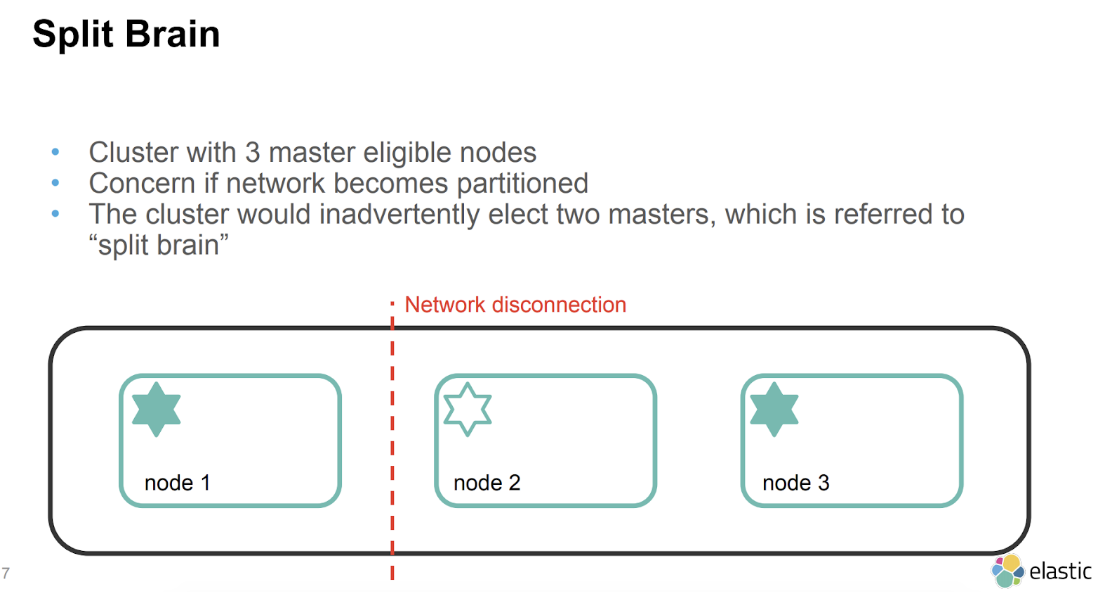

For the purposes of this tutorial we will run Chaos Engineering experiments on the Elasticsearch nodes to reproduce an issue referred to as “split brain”. We will then explain how to avoid “split brain” and run an additional Chaos Engineering experiment to ensure it does not occur. The Chaos Engineering experiment we will perform is a Gremlin Shutdown attack on one Elasticsearch node.

Split brain indicates data inconsistencies originating from separate data sets with overlap in scope, either because of servers in a network design, or a failure condition based on servers not communicating and synchronizing their data to each other. This last case is also commonly referred to as a network partition.

Source: Elasticsearch architecture best practices - by Eric Westberg, Elastic

Prerequisites

To complete this tutorial you will need the following:

- 3 cloud infrastructure hosts running Ubuntu 16.04

- A Gremlin account (sign up here)

- A Datadog account

You will also need to install the following on each of your 3 cloud infrastructure hosts. This will enable you to run your Chaos Engineering experiments.

- Java

- Elasticsearch

- Docker

- Gremlin

- Datadog

Overview

This tutorial will walk you through the required steps to run the Elasticsearch Split Brain Chaos Engineering experiment.

- Step 1 - Setting up a VPN for your Elasticsearch hosts using Ansible

- Step 2 - Installing Java

- Step 3 - Install Elasticsearch on each host

- Step 4 - Installing Docker on each host

- Step 5 - Installing Gremlin in a Docker container on each host

- Step 6 - Installing Datadog in a Docker container on each host

- Step 7 - Running the Elasticsearch Split Brain Chaos Engineering experiment

- Step 8 - Preventing Elasticsearch Split Brain

- Step 9 - Additional Chaos Engineering experiments you can run with Gremlin

Step 1 - Setting up a VPN for your Elasticsearch hosts using Ansible

We will use an Ansible Playbook to automatically create /etc/hosts entries on each host that resolve each VPN server's inventory hostname to its VPN IP address.

First you will need to install Ansible on your local machine. You can use Homebrew to do this:

On your local machine, use git clone to download a copy of the Playbook. We'll clone it to our home directory:

Now change to the newly-downloaded ansible-tinc directory:

Before running the Playbook, you must create a hosts file that contains information about the hosts you want to include in your Tinc VPN.

Enter your own VPN configuration in the ~/ansible-tinc/hosts file. An example is provided below:
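As a rough sketch of what such an inventory might look like, the snippet below writes a three-node example to a scratch file. The `[vpn]` and `[removevpn]` groups and the `vpn_ip` variable follow the layout we understand the ansible-tinc Playbook to expect, so check them against your copy of the Playbook. The function name `write_example_hosts`, the hostnames `node01` to `node03`, and the addresses (taken from documentation-only ranges) are placeholders, not values from this tutorial.

```shell
# Write an example Ansible inventory for the Tinc VPN to the given path.
# Hostnames and IP addresses are placeholders; substitute your own.
write_example_hosts() {
  cat > "$1" <<'EOF'
[vpn]
node01 vpn_ip=10.0.0.1 ansible_host=192.0.2.11
node02 vpn_ip=10.0.0.2 ansible_host=192.0.2.12
node03 vpn_ip=10.0.0.3 ansible_host=192.0.2.13

[removevpn]
EOF
}

# Write to a scratch file first, review it, then copy it into place.
write_example_hosts "${TMPDIR:-/tmp}/hosts.example"
```

Here `vpn_ip` is the address each host will use on the VPN, and `ansible_host` is the address Ansible connects to.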

Once your hosts file contains all of the servers you want to include in your VPN, save your changes.

At this point, you should test that Ansible can connect to all of the hosts in your inventory file:

You should see a "SUCCESS" message similar to below:

Before running the Playbook, you may want to review the contents of the /group_vars/all file:

You will see the following:

Next we will set up the VPN across your hosts by running the Playbook.

From the ansible-tinc directory, run this command to run the Playbook:

While the Playbook runs, it should provide the output of each task that is executed. If successful, it will appear as below:

All of the hosts in the inventory file should now be able to communicate with each other over the VPN network.

Step 2 - Installing Java

Install Java 8 on all 3 of your Elasticsearch hosts.

Add the Oracle Java PPA to apt:

Update your apt package database:

Install the latest stable version of Oracle Java 8 with this command (and accept the license agreement that pops up):

Be sure to repeat this step on all of your Elasticsearch servers.

Now that Java 8 is installed, let's install Elasticsearch.

Step 3 - Install Elasticsearch on each host

Elasticsearch can be installed with a package manager by adding Elastic's package source list. Complete this step on all of your Elasticsearch servers.

Run the following command to import the Elasticsearch public GPG key into apt:

Create the Elasticsearch source list:

Update your apt package database:

Install Elasticsearch:

Elasticsearch is now installed but it needs to be configured before you can use it.

Open the Elasticsearch configuration file for editing:

Because our VPN interface is named "tun0" on all of our servers, we will configure all of our servers with the same line:

Note the addition of "_local_", which will allow you to use the Elasticsearch HTTP API locally by sending requests to localhost.

Next, set the name of your cluster:

Next, we will set the name of each node.

Next, you will need to configure an initial list of nodes that will be contacted to discover and create a cluster.

Your servers are now configured to form a basic Elasticsearch cluster.
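To make the settings described above concrete, here is a minimal sketch that prints them for one node. It assumes an Elasticsearch release that still uses Zen discovery (before 7.0), a cluster named `production` (inferred from the production.log file referenced later in this tutorial), and hosts named `node01` to `node03`. The helper `render_es_settings` is hypothetical and only prints text; it does not edit elasticsearch.yml.

```shell
# Print the cluster-related elasticsearch.yml settings for one node.
# Usage: render_es_settings <node-name>
# The cluster name and the host list are assumptions; adjust them to your setup.
render_es_settings() {
  cat <<EOF
network.host: [_tun0_, _local_]
cluster.name: production
node.name: $1
discovery.zen.ping.unicast.hosts: ["node01", "node02", "node03"]
EOF
}

render_es_settings node01
```

Only `node.name` differs between hosts; the other three lines are identical on every node.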

Save and exit elasticsearch.yml.

Now start Elasticsearch:

Then run this command to start Elasticsearch on boot up:

Repeat these steps on all of your Elasticsearch hosts.

To check state from each of your Elasticsearch hosts, run the following command on each host:

You should see the following:

To check cluster state from your Elasticsearch hosts, run the following command:

You will see the following:

If you see output that is similar to this, your Elasticsearch cluster is running.
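The checks in this step can be wrapped in small helpers so they are easy to repeat during later experiments. This is a sketch rather than the exact commands from the original tutorial: `es_url`, `es_cluster_health`, and `es_cluster_state` are hypothetical names, and `ES_HOST` and `ES_PORT` are assumed environment variables that default to localhost and 9200. The `_cluster/health` and `_cluster/state` endpoints are part of the Elasticsearch HTTP API.

```shell
# Build a URL for the Elasticsearch HTTP API.
# Host and port default to localhost:9200 unless ES_HOST / ES_PORT are set.
es_url() {
  printf 'http://%s:%s/%s\n' "${ES_HOST:-localhost}" "${ES_PORT:-9200}" "$1"
}

# Summarise cluster health (status, number of nodes, and so on).
es_cluster_health() {
  curl -s -XGET "$(es_url '_cluster/health?pretty')"
}

# Dump the full cluster state, including the nodes that have joined.
es_cluster_state() {
  curl -s -XGET "$(es_url '_cluster/state?pretty')"
}
```

Run `es_cluster_health` on any host; with all three nodes joined, the reported node count should be 3.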

Step 4 - Installing Docker On Each Host

In this step, you’ll install Docker.

Add Docker’s official GPG key:

Use the following command to set up the stable repository.

Update the apt package index:

Make sure you are about to install from the Docker repo instead of the default Ubuntu 16.04 repo:

Install the latest version of Docker CE:

Docker should now be installed, the daemon started, and the process enabled to start on boot. Check that it is running:

Type q to return to the prompt.

Make sure you are in the docker user group, replacing tammy with your username:

Next we will install Gremlin.

Step 5 - Installing Gremlin On Each Host

After you have created your Gremlin account (sign up here) you will need to find your Gremlin Daemon credentials. Log in to the Gremlin App using your Company name and sign-on credentials. These were emailed to you when you signed up to start using Gremlin.

Navigate to Team Settings and click on your Team.

Store your Gremlin agent credentials as environment variables, for example:
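A minimal sketch of this step is shown below. It assumes the secret-based credentials that Gremlin reads from the `GREMLIN_TEAM_ID` and `GREMLIN_TEAM_SECRET` environment variables; the values are placeholders, and `gremlin_creds_present` is a hypothetical helper for checking that both are set before you start the daemon.

```shell
# Placeholder values: replace with the Team ID and Secret from Team Settings.
export GREMLIN_TEAM_ID="your-team-id"
export GREMLIN_TEAM_SECRET="your-team-secret"

# Return non-zero (with a message) if either credential is missing,
# so later commands can stop early instead of failing inside the container.
gremlin_creds_present() {
  if [ -z "${GREMLIN_TEAM_ID:-}" ] || [ -z "${GREMLIN_TEAM_SECRET:-}" ]; then
    echo "GREMLIN_TEAM_ID and GREMLIN_TEAM_SECRET must both be set" >&2
    return 1
  fi
}
```

Avoid committing real credentials to shell profiles or scripts that are shared or checked into version control.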

Next run the Gremlin Daemon in a Container.

Use docker run to pull the official Gremlin Docker image and run the Gremlin daemon:

Use docker ps to see all running Docker containers:

Jump into your Gremlin container with an interactive shell (replace b281e749ac33 with the real ID of your Gremlin container):

From within the container, check out the available attack types:

Step 6 - Installing the Datadog agent in a Docker container

To install Datadog in a Docker container you can use the Datadog Docker easy one-step install.

Run the following command, replacing the API key with your own:

It will take a few minutes for Datadog to spin up the Datadog container, collect metrics on your existing containers and display them in the Datadog App.

View your Docker containers in Datadog. You should see the following on the host Dashboard:

Step 7 - Running the Elasticsearch Split Brain Chaos Engineering Experiment

We will use the Gremlin CLI attack command to create a shutdown attack.

Now use the Gremlin CLI (gremlin) to run a shutdown attack against the host from a Gremlin container:

This attack will shut down the Elasticsearch host where you ran the attack.

This triggers an issue referred to as Elasticsearch Split Brain which is documented below:

To check which Elasticsearch node is currently the master node in your cluster run the following:
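One way to look for split brain, sketched here under stated assumptions, is to ask every node which master it sees and compare the answers. The `_cat/master` endpoint and its `h=node` column selector are part of the Elasticsearch cat API. The helper names `master_seen_by` and `count_distinct_masters`, and the hostnames `node01` to `node03`, are hypothetical.

```shell
# Ask one node which node it currently considers the elected master.
# _cat/master with h=node returns only the master's node name.
master_seen_by() {
  curl -s -XGET "http://$1:9200/_cat/master?h=node"
}

# Count the distinct master names supplied on stdin (one per line),
# ignoring trailing whitespace and blank lines from unreachable nodes.
# A healthy cluster yields 1; more than 1 suggests split brain.
count_distinct_masters() {
  sed 's/[[:space:]]*$//' | grep -v '^$' | sort -u | wc -l | tr -d ' '
}

# Example (hostnames are placeholders):
# for h in node01 node02 node03; do master_seen_by "$h"; done | count_distinct_masters
```

If the surviving nodes each report a different master, the cluster has split into independent groups that can accept conflicting writes.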

You should see something similar to the following:

View the contents of the Elasticsearch Production Log on one of your running Elasticsearch hosts:

You may notice issues such as other nodes in the cluster stopping the Elasticsearch service when you run the Gremlin Shutdown attack, for example:

To prevent Elasticsearch Split Brain from occurring, we will need to take the additional steps described in Step 8.

Step 8 - Preventing Elasticsearch Split Brain

There are two common types of Elasticsearch nodes: master and data. Master nodes perform cluster-wide actions, such as managing indices and determining which data nodes should store particular data shards. Data nodes hold shards of your indexed documents, and handle CRUD, search, and aggregation operations. As a general rule, data nodes consume a significant amount of CPU, memory, and I/O.

By default, every Elasticsearch node is configured to be a "master-eligible" data node, which means it stores data (and performs resource-intensive operations) and has the potential to be elected as a master node. An Elasticsearch cluster should be configured with dedicated master nodes so that the master node's stability can't be compromised by intensive data node work.

Ensure you have a cluster with 3 Elasticsearch nodes

First, restart the Elasticsearch master node that you shut down in the previous step.

You will need 3 master nodes to run this Chaos Engineering experiment.

How to Configure Dedicated Master Nodes

Before configuring dedicated master nodes, ensure that your cluster will have at least 3 master-eligible nodes. This is important to avoid a split-brain situation, which can cause inconsistencies in your data in the event of a network failure.

To configure a dedicated master node, edit the node's Elasticsearch configuration:

Add the following lines:

The first line, node.master: true, specifies that the node is master-eligible and is actually the default setting. The second line, node.data: false, restricts the node from becoming a data node.
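If you prefer to script this change, the sketch below appends the two lines only when they are missing, so it is safe to run more than once. The function name `add_dedicated_master_settings` is hypothetical, and the path in the usage comment assumes the package-installed location of elasticsearch.yml. The `node.master` and `node.data` settings themselves apply to older Elasticsearch releases; newer releases express the same thing through `node.roles`.

```shell
# Append the dedicated-master settings to a config file unless already present.
# Usage (as root): add_dedicated_master_settings /etc/elasticsearch/elasticsearch.yml
add_dedicated_master_settings() {
  for line in 'node.master: true' 'node.data: false'; do
    # -x matches the whole line, -F treats the pattern as a fixed string.
    grep -qxF "$line" "$1" || echo "$line" >> "$1"
  done
}
```

Try it against a copy of your configuration file first, and review the result before replacing the live file.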

Save and exit.

Now restart the Elasticsearch node to put the change into effect:

Be sure to repeat this step on your other dedicated master nodes.

You can query the cluster to see which nodes are configured as dedicated master nodes with this command:

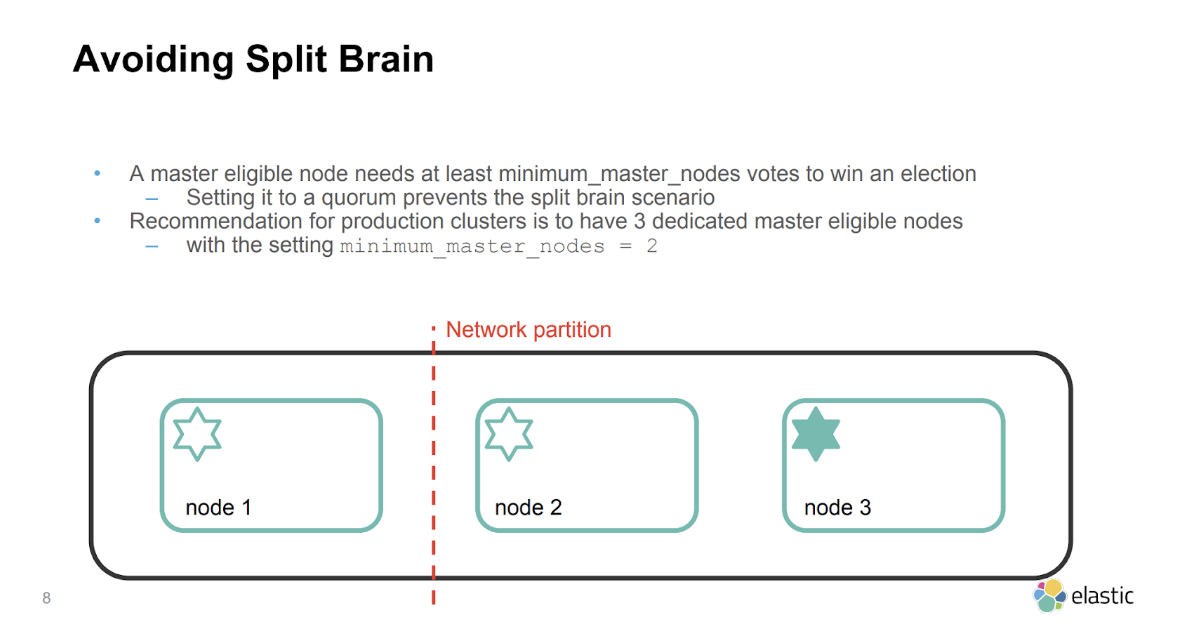

Configure Minimum Master Nodes

When running an Elasticsearch cluster, it is important to set the minimum number of master-eligible nodes that need to be running for the cluster to function normally, which is often referred to as quorum. This is to ensure data consistency in the event that one or more nodes lose connectivity to the rest of the cluster, preventing what is known as a "split-brain" situation. For example, for a 3-node cluster, the quorum is 2.
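The quorum is a strict majority of the master-eligible nodes, that is, the node count divided by two (rounded down) plus one. A small sketch of that arithmetic follows; the function name `quorum` is our own.

```shell
# Quorum for n master-eligible nodes: floor(n / 2) + 1.
quorum() {
  echo $(( $1 / 2 + 1 ))
}

# Show the minimum master nodes value for a few common cluster sizes.
for n in 3 5 7; do
  echo "master-eligible nodes: $n -> minimum master nodes: $(quorum "$n")"
done
```

With this value, a partition holding fewer than a majority of the master-eligible nodes cannot elect its own master, which is what prevents two masters from existing at once.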

The minimum master nodes setting can be set dynamically, through the Elasticsearch HTTP API. Run this command on any node:
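As a hedged sketch of such a request, the helpers below build the JSON body and send it to the cluster settings API. They assume a release that still supports `discovery.zen.minimum_master_nodes` (before Elasticsearch 7.0) and store the value as a persistent setting; `min_masters_body` and `set_min_masters` are hypothetical names.

```shell
# Build the JSON body that sets discovery.zen.minimum_master_nodes persistently.
min_masters_body() {
  printf '{"persistent":{"discovery.zen.minimum_master_nodes":%d}}\n' "$1"
}

# Apply the setting through the cluster settings API.
# Usage: set_min_masters <count> [host]   (host defaults to localhost)
set_min_masters() {
  curl -s -XPUT "http://${2:-localhost}:9200/_cluster/settings?pretty" \
    -H 'Content-Type: application/json' \
    -d "$(min_masters_body "$1")"
}
```

For the 3-node cluster in this tutorial you would call `set_min_masters 2`.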

This setting can also be set in /etc/elasticsearch/elasticsearch.yml as:

If you want to check this setting later, you can run this command:

Running the Elasticsearch Split Brain Chaos Engineering Experiment After Config Changes

We will use the Gremlin CLI attack command to create a shutdown attack.

Now use the Gremlin CLI (gremlin) to run a shutdown attack from within a Gremlin container:

This attack will shut down the Elasticsearch host.

To check which Elasticsearch host is currently the master node in your cluster run the following on any host:

You should see something similar to the following:

Now that you have resolved the Split Brain issue, when you shut down a master you will find a message similar to the following in /var/log/elasticsearch/production.log:

Step 9 - Additional Chaos Engineering experiments to run on Elasticsearch

There are many Chaos Engineering experiments you could possibly run on your Elasticsearch infrastructure:

- Time Travel Gremlin - will changing the clock time of the host impact how Elasticsearch processes data?

- Latency & Packet Loss Gremlins - will they impact the ability to use the Elasticsearch API endpoints?

- Disk Gremlin - will filling up the disk crash the host?

We encourage you to run these Chaos Engineering experiments and share your findings! To get access to Gremlin, sign up here.

Conclusion

This tutorial has explored how to install Elasticsearch on a cluster of hosts and run Gremlin and Datadog in Docker containers for your Chaos Engineering experiments. We then ran a Chaos Engineering experiment on an Elasticsearch host using the Gremlin Shutdown attack, first to reproduce split brain and then to confirm that the configuration changes prevent it.

This is an older tutorial
