The Ultimate Guide to Kubernetes High Availability

Learn how to improve Kubernetes availability and resiliency with testing and risk monitoring

Kubernetes high availability requires a new approach to reliability

There’s more pressure than ever to deliver high-availability Kubernetes systems. Consumers expect applications to be available at all times and have zero patience for outages or downtime. At the same time, businesses have created intricate webs of APIs and dependencies that rely on your applications. These customers and business need your Kubernetes deployments to be reliable.

Unfortunately, building reliable systems is easier said than done. Every system has potential points of failure that lead to outages—also known as reliability risks. And when you’re dealing with the complex, ephemeral nature of Kubernetes, there’s an even higher possibility that risks will go undetected until they cause incidents.

If you want to deliver highly available and reliable Kubernetes deployments, you need to change your approach to reliability.

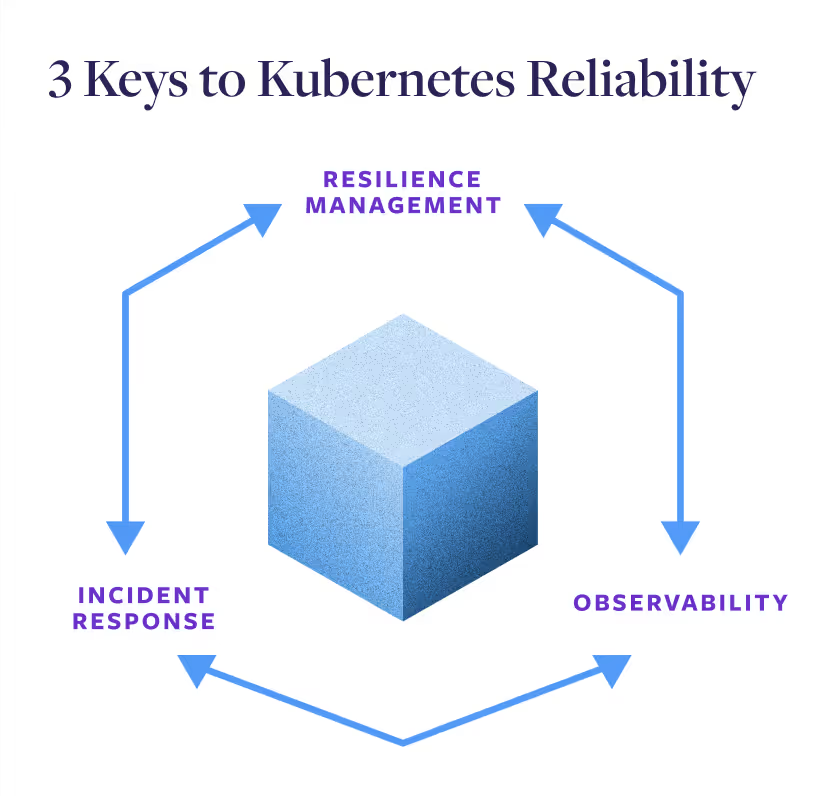

The traditional reliability approach starts with using observability to instrument your systems. Any issue or non-optimum spike in your metrics creates an alert, which is then resolved using your incident response runbook.

This reactive approach only surfaces risks after the failure has occurred. It creates a gap between where you think the reliability of your system is, and where you find out it actually is when there’s an outage.

In order to meet the Kubernetes high availability demands of your users, you need to fill in that gap with a managed, standards-based approach to your system’s resiliency.

By managing the resiliency of your Kubernetes redeployment, you can surface reliability risks proactively and address them before they cause outages and downtime.

Working together, these three practices allow your teams to get visibility into the performance of their deployments, proactively detect risks, and quickly resolve any failures that slip through the cracks—raising the reliability and availability of your system.

This article gives you a framework to build your own resiliency management practice for Kubernetes. By using the framework and practices below, you’ll be able to improve your Kubernetes availability by preventing incidents and outages, accelerate key IT initiatives by improving your Kubernetes reliability posture, and shift-left reliability by integrating resiliency testing into your Software Development Lifecycle (SDLC).

Availability vs. Resiliency vs. Reliability

Customers and leadership often think in terms of availability, which comes from efforts to improve the resiliency and reliability of systems.

- Availability - A direct measure of uptime and downtime. Often measured as a percentage of uptime (e.g. 99.99%) or amount of downtime (e.g. 52.60 min/yr or 4.38 min/mo). This is a customer-facing metric mathematically computed by comparing uptime to downtime.

- Resiliency - A measure of how well a system can recover and adapt when there’s disruptions, increased (or decreased) errors, network interruptions, etc. The more resilient a system is, the more it can respond correctly when changes occur.

- Reliability - A measure of the ability of a workload to perform its intended function correctly and consistently when it’s expected to. The more reliable your systems, the more you and your customers can have confidence in them.

Reliability determines the actions your organization takes to ensure systems perform as expected, resiliency is how you improve the ability of your systems to respond as expected, and availability is the result of your efforts.

Kubernetes deployment reliability risks

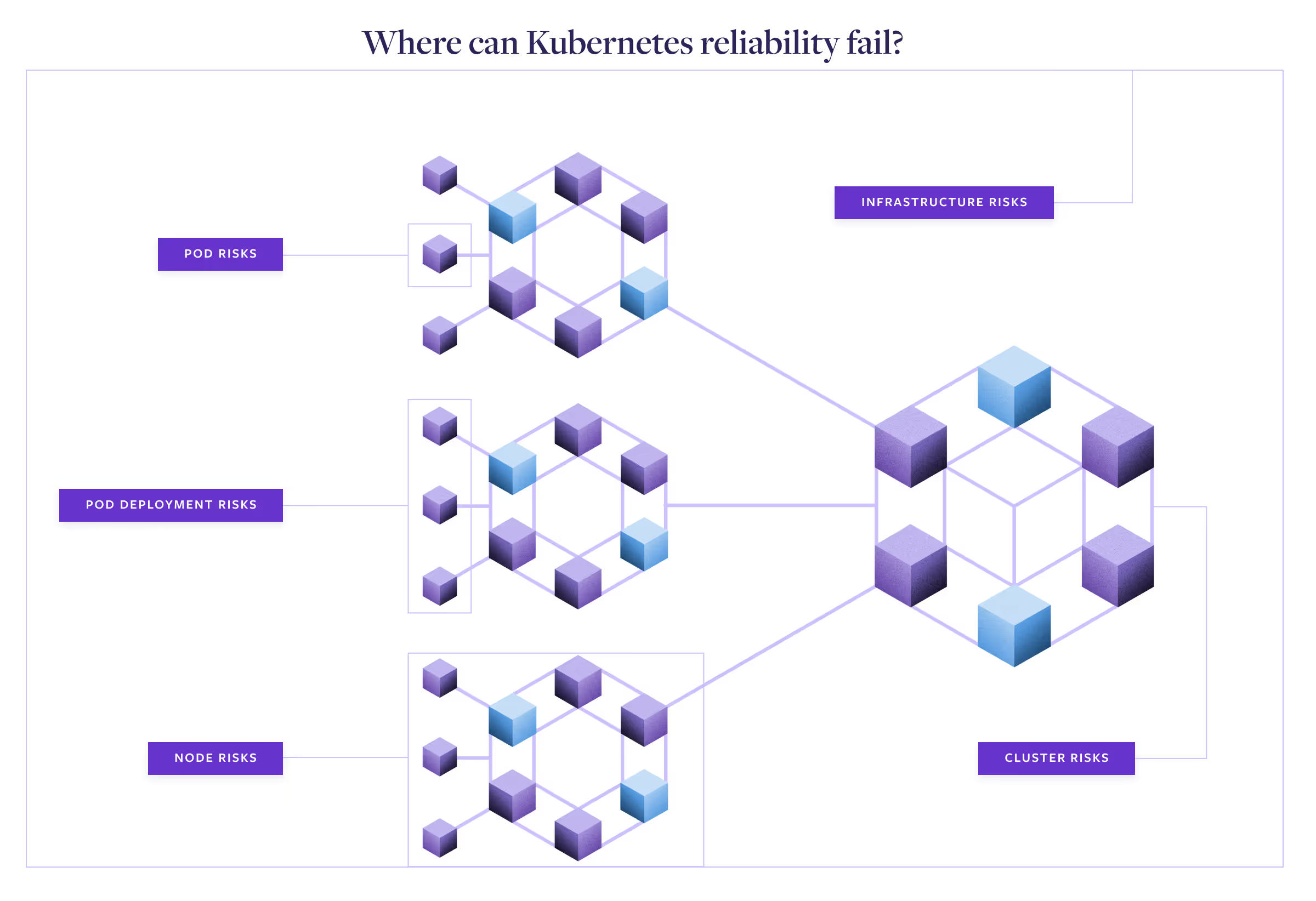

Kubernetes is deployed in a series of layers that are key to its resiliency and adaptability. The layers of pods, nodes, and clusters provide distinct separation that’s essential for being able to scale up or down and restart as necessary while maintaining redundancy and availability.

Unfortunately, these same layers also increase the potential points of failure. Their interconnected nature can take a small error, such as a container consuming more CPU or memory than expected, then compound it across other nodes and cause a wide-scale outage.

The first step to improve Kubernetes availability is to look at the potential reliability risks inherent in every Kubernetes deployment. As you build resiliency standards for your Kubernetes deployments, you’ll want to account for these reliability risks.

Underlying Kubernetes infrastructure reliability risks

Every software application is dependent on the infrastructure below it for stability, and Kubernetes is no exception. These include risks like:

- Power outages

- Hardware failures

- Cloud provider outages

Architecting to minimize these risks includes Availability Zone redundancy, multi-region deployments, and other best practices. You’ll need to be able to test whether these practices are in place for your system to be resilient to these failures.

Kubernetes cluster reliability risks

Clusters lay out the core policies and configurations for all of the nodes, pods, and containers deployed within them. When reliability risks occur at the cluster level, these often become endemic to the entire deployment, including:

- Misconfigured control plane nodes

- Autoscaling problems

- Unreliable or insecure network connections between nodes

Detecting and testing for the risks will include looking at your cluster configuration, how it responds to increases in resource demands, and its response to changing network activity.

Kubernetes node reliability risks

Any reliability risks in the nodes will immediately inhibit the ability to run pods. While cloud-hosted Kubernetes providers can automatically restart problem nodes, if there’s core issues with the control plane, the problem will just be replicated again. These issues could include:

- Automatic or unscheduled reboots

- Kernel panics

- Problems with the kubelet process or other Kubernetes-related process

- Network connectivity problems

Node-based reliability risks are usually focused around the ability of the node to communicate with the rest of the cluster, get the resources it needs, and correctly manage pods.

Kubernetes pod deployment reliability risks

The node may be configured correctly, but there can still be issues and risks related directly to deploying the pods itself within the node, such as:

- Misconfigured deployments

- Too few (or too many) replicas

- Missing or failing container images

- Deployment failures due to limited cluster capacity

These risks are tied directly to the ability (or inability) of nodes to correctly deploy pods within them, and are often tied to limited resources or finding/communicating with container images.

Kubernetes pod reliability risks

Even if everything goes well with pod deployment, there can still be issues that affect the reliability of the application running within the pods, including:

- Application crashes

- Source code errors

- Unhandled exceptions

- Application version conflicts

Many of these “last-mile” risks can be uncovered by monitoring and testing for specific Kubernetes states, such as CrashLoopBackOff or ImagePullBackOff.

How to determine critical Kubernetes reliablility risks

Unfortunately, there’s never enough time or money to fix every single reliability risk. Instead, you need to find that balance where you address the critical reliability risks that could have the greatest impact and deprioritize the risks that would have a minor impact. When you consider the number of moving pieces and potential reliability risks present in Kubernetes, this kind of prioritization and identification becomes even more important.

The exact list will change from organization to organization and service to service, but you can start by looking at some of the core resiliency features in Kubernetes:

- Scalability - Can the system quickly respond to changes in demand?

- Redundancy - Can applications keep running if part of the cluster fails?

- Recoverability - Can Kubernetes recover if something fails?

- Consistency/Integrity - Are pods using the same image and running smoothly?

Any issue that interferes with these capabilities poses a critical risk to your Kubernetes deployment. Resiliency management takes a systematic approach to surfacing these reliability risks across your organization.

Finding and resolving risks at scale needs a standardized approach

Kubernetes and rapid-deployment Software Development Lifecycles (SDLCs) go hand in hand. At the same time, the interconnected nature of Kubernetes means that building reliability requires clear standards and governance to ensure uniform resiliency across all your various services.

Traditionally, these two practices have been at odds. Governance and heavy testing gates tend to slow down deployments, while a high-speed DevOps approach stresses a fast rate of deployment and integration.

If you’re going to bring the two together, you need a different approach, one based on standards, but with automation and technological capabilities that allow every team to uncover reliability risks in any Kubernetes deployment with minimal lift.

The approach needs to be able to surface known risks from all layers of a Kubernetes deployment, as well as uncover unknown, deployment-specific risks. Just as importantly, this practice needs to include standardized metrics and processes that can be used across the organization so all Kubernetes deployments are held to the same reliability standards.

How to deploy resilient Kubernetes clusters at scale

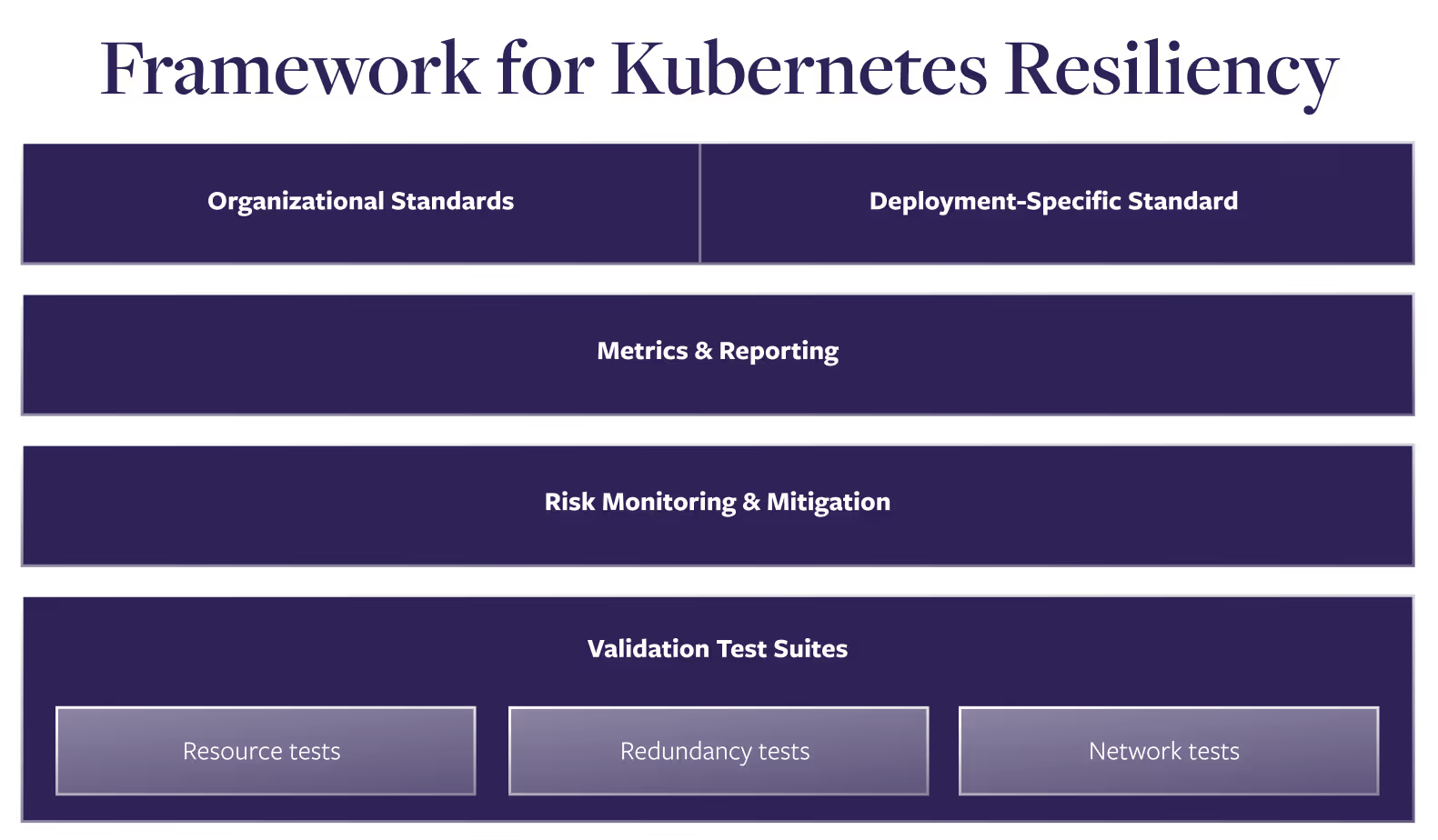

Any approach for Kubernetes resiliency would have to combine the known possible reliability risks above with the criteria for critical risk identification and organization-wide governance.

When these are paired with the technology of Fault Injection testing, it creates a resiliency management framework that combines automated validation testing, team-based exploratory testing, and continuous risk monitoring with the reporting and processes to remediate risks once found.

The framework consists of four distinct sections.

1. Kubernetes resiliency standards

Some reliability risks are common to all Kubernetes deployments. For example, every Kubernetes deployment should be tested against how it’s going to respond during a surge in demand for resources, a drop in network communications, or a loss of connection to dependencies.

These are recorded under Organizational Standards, which inform the standard set of reliability risks all of your teams should test against. While you start with common reliability risks, this list should expand to include risks unique to your company that are common across your organization. For example, if every service connects to a centralized database, then you should standardize around testing what happens if there’s latency in that connection.

Deployment-specific standards are deviations from the core Organizational Standards for specific services or deployments. The standards can be stricter or looser than organizational standards, but either way, they’re exceptions that should be noted. For example, an internal sales tool might have a higher latency tolerance for connecting to a database because your team is more willing to wait, while an external checkout service might be required to move to a replicated copy of the database faster than normal to avoid losing sales.

2. Kubernetes reliability metrics and reporting

Reliability is often measured by either the binary “Currently up/currently down” status or the backwards-facing “uptime vs. downtime” metric. But neither of these measurements will help you see the posture of potential reliability risks before they become outages—and whether you’ve addressed them or gained more risks over time.

This is why it’s essential to have metrics, reporting, and dashboards that show the results of your resiliency tests and risk monitoring. These dashboards give the various teams core data to align around and be accountable for results. By showing how each service performs on tests built against the defined resiliency standards, you get an accurate view of your reliability posture that can inform important prioritization conversations.

Find out How to measure Kubernetes cluster reliability

3. Kubernetes risk monitoring and mitigation

Some Kubernetes risks, such as missing memory limits, can be quick and easy to fix, but can also cause massive outages if unaddressed. The complexity of Kubernetes can make it easy to miss these issues, along with other known reliability risks common across all Kubernetes deployments, which means you can operationalize their detections.

Many of these critical risks can be located by scanning configuration files and containers statuses. These scans should run continuously on Kubernetes deployments so these risks can be surfaced and addressed quickly.

Learn how to monitor Kubernetes reliability risks—and how to automatically detect the top ten most critical risks.

4. Kubernetes validation resilience testing using standardized test suites

Utilizing Fault Injection, resiliency testing safely creates fault conditions in your deployment so you can verify that your systems respond the way you expect them to, such as a spike in CPU demand or a drop in network connectivity to key dependencies.

Using the standards from the first part of the framework, suites of reliability tests can be created and run automatically. This is a validation testing approach that uncovers places where your systems aren’t meeting standards, and the pass/fail data can be used to create metrics that show your changing reliability posture over time.

With validation testing, you have a standard, state, or policy that you’re testing against to validate that your system meets the specific requirements. For example, if a pod reaches its CPU limit, is a new pod spun up to take the extra load? In this case, you inject the fault and verify that your system reacts the way it’s supposed to.

It’s important to note that this is different from exploratory testing, which is the kind of testing commonly done as part of Chaos Engineering. With exploratory testing, you’re aiming to uncover specific unknown failures, such as what happens if an init container is unavailable for 5 minutes. Does the pod load without it, or does it crash? Exploratory testing should be done by someone trained in how to safely define the experiment to minimize the impact, such as with a Chaos Engineering certification.

Learn more:

- Validation and resilience testing for Kubernetes clusters

- Getting started with Chaos Engineering on Kubernetes

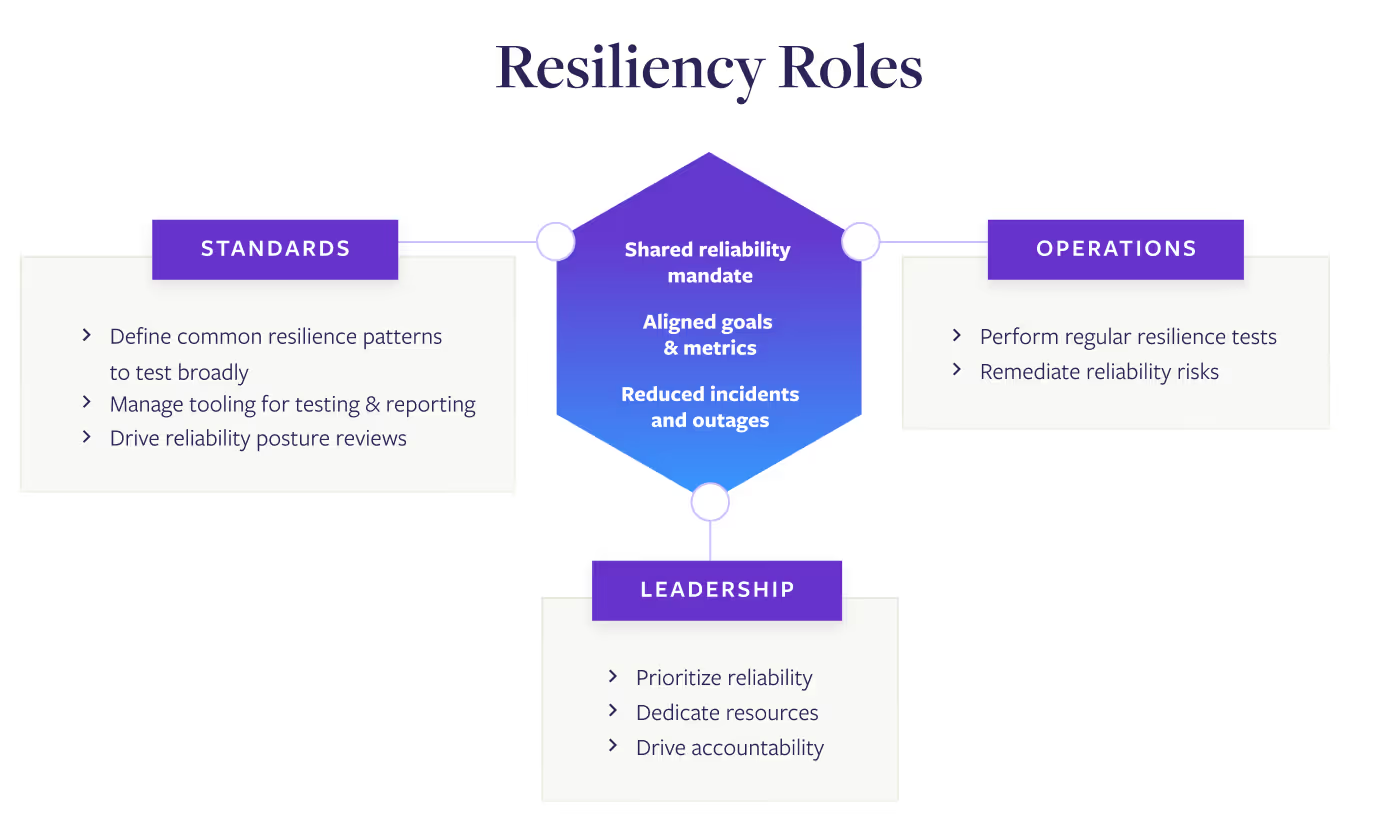

Team roles for improving Kubernetes resiliency

Any successful Kubernetes resilience effort requires contributions from three key roles. Everyone working on resiliency falls into one of these three roles, which are sorted by their responsibilities within the framework:

- Leadership roles create prioritization and allocate resources to resiliency management.

- Standards roles set standards, manage tooling, and oversee the execution of the framework.

- Operations roles perform tests on services and remediate reliability risks.

The roles aren’t tied to specific titles, and it’s common for one person or team to take on two of the roles. For example, performance engineering teams or centralized SRE teams often take on both setting the standards and performing tests and mitigations. Without someone stepping in to take on the requirements of each role, teams struggle to make progress and reliability efforts fail.

Kubernetes leadership

The leadership role is the one responsible for setting the priorities of engineering teams and allocating resources. In some companies this is held by someone in the C-suite, while in others it’s held by Vice Presidents or Directors. The defining factor is that anyone in this role has the authority to make organization-wide priorities and direct resources towards them.

Core Responsibilities:

- Dedicate resources to reliability

Most reliability efforts fail due to a lack of prioritization from the organization. For your resilience practice to be effective, leadership roles need to allocate resources to it. - Ensure standards create business value

Work with those in the standards roles to make sure resiliency standards and goals tie directly back to business value. Try to find the balance where the time, money, and effort spent finding and mitigating reliability risks is creating far more value than it takes in resources. - Drive accountability and review metrics dashboards

When leadership is visibly engaged in reviewing reliability metrics, it lends importance to the efforts, which, in turn drives action. Leadership should hold operators accountable for improving resiliency—and applaud them when they do.

Kubernetes standards role

The standards role is responsible for driving the Kubernetes resilience efforts across the organization. They own the standards, tooling, and organizational processes for executing the framework. In some organizations, this role is in centers of excellence, such as SRE or Platform Engineering teams, while in others this role is added to an existing role like Kubernetes architects.

Core Responsibilities:

- Define reliability standards

Reliability standards should be based on a combination of universal best practices, organizational reliability goals, and unique deployment reliability risks. These should be consistent across the organization, with any service-specific deviations (such as those discovered through exploratory testing) documented. - Manage tooling for testing & reporting

By centralizing testing and reporting tooling with the standards role, tests can be automated to minimize the lift by individual teams and metrics can be compiled to make it easier to align around reliability and prioritize fixes. - Determine standardized validation test suites

Reliability test suites are a powerful tool for creating a baseline of resiliency across your organization. The standards role should define these, then integrate them into tooling so teams can automate running them. - Owning operationalization processes

Metrics should be regularly reported and reviewed in meetings where the reliability posture is reviewed, then any fixes are prioritized. Whether these are standalone meetings or integrated into existing meetings, the standards role should own and run these review processes.

Kubernetes operator role

This role could have a wide variety of titles, but the defining characteristics are that they’re responsible for the resiliency of specific services. They make sure the tests are run, report the results, and make sure any prioritized risks are addressed.

Core Responsibilities:

- Run tests and report on results

Once the initial agents or setup is done, testing should be automated to make this a lighter lift. As part of the prioritization and review meetings, operators will need to make sure the test results are reported and speak to any discussion about them. - Respond to risks detected by monitoring

Risks detected by monitoring can often be fixed with a change to the configuration or other lighter-lift fixes. In these cases, operators should be empowered to quickly address these risks to maintain Kubernetes resiliency. - Address and mitigate reliability risks

Once reliability risks have been prioritized, operators are responsible for making sure the risk is fixed. They may not be the person to perform the actual work, but they should be responsible for making sure any risks are addressed, then testing again to verify the fixes.

What makes a best-in-class reliability practice?

A best-in-class reliability practice extends across teams to improve the resiliency and availability of your systems. At the same time, it enables engineering teams to spend less time fighting fires and resolving incidents so they can focus on vital work like new features or innovations.

Gremlin has worked with reliability program leaders at Fortune 100 companies to identify the traits of successful programs. Reliability programs built around these four pillars and 18 traits align organizations, get crucial buy-in, and achieve real, measurable improvements to the reliability of their systems.

Find out what it takes to build an effective reliability effort with the How to Build a Best-in-Class Reliability Program checklist.

Next steps: Your first 30 days of Kubernetes resiliency

Your Kubernetes resiliency management practice will mature, grow, and change over time, but it doesn’t have to take months to start creating results. In fact, you can uncover reliability risks and having a demonstrable impact on your Kubernetes availability with this roadmap for your first 30 days.

1. Choose the services for your proof of concept

Leadership and teams can be hesitant to roll out new programs across the entire organization, and understandably so. Many resiliency efforts start with a few services to show its efficacy before it can be more widely adopted.

You can speed the process along by getting alignment on a specific group of services being used for the pilot. These are your early-adoptor services, and the more you have everyone involved on board, the better results you’re going to get.

In fact, having a small group of teams who are invested and focused can often be more effective than trying with a wider, more hesitant group right out of the gate. Once you start proving results with early adopters, then you’ll get less resistance as you roll the program out more broadly.

When choosing these services, you should start with ones that are important to your business to provide the greatest immediate value. Dependencies are a common source of reliability risks, so a good choice is to start with central services that have fully-connected dependencies. You could also select services that are fully loaded with production data and dependencies, but aren’t launched yet, such as services during a migration or about to be launched. The last common choice is services that are already having reliability issues, thus allowing you to prove your effectiveness and address an area of concern at the same time.

2. Set up your systems for risk monitoring and testing

Fault Injection requires a tool to be integrated into your Kubernetes deployment. If you’re building your own tool, this can be pretty complicated, but a reliability platform like Gremlin streamlines the process of installing agents and setting up permissions.

Once the agent is set up, you’ll want to define your risk monitoring parameters and core validation test suites. A good place to start is with the critical risks from Chapter 4 and the test suites from Chapter 5. (Gremlin has these set up as default test suites for any service.)

Generally, these core risks and test suites are a good place to start, then you can adjust them as you become more comfortable with testing. But you can also alter these test suites to fit unique standards for your organization or to include a test that validates resilience to specific issues, such as ones that recently caused an outage.

3. Use risk monitoring to detect Kubernetes risks

Almost every Kubernetes system has at least one of the critical risks above. Since risk monitoring uses continuous detection and scanning rather than active Fault Injection testing, it’s a faster, easier way to uncover active critical reliability risks.

Follow these steps to quickly find risks, fix them, and prove the results:

- Install the agent or tool in your Kubernetes cluster.

- The scan will return a list of reliability risks, along with a mitigation status.

- Work with the team behind the service to address unmitigated risks. Most of these, such as missing memory limits, can be a relatively light lift to fix.

- Deploy the fix and go back to the monitoring dashboard. Any risk you addressed should be shown as mitigated.

- Congratulations! You’ve made your Kubernetes deployment more reliable.

Since risk monitoring is automated and non-invasive, this is also an easier way to spur adoption of resiliency management with other teams. Show those teams the results you were able to create, then help them to set up their own risk monitoring.

4. Use validation testing for a baseline reliability posture report

Now that you’ve addressed some of the more pressing reliability risks, it’s time to start running Fault Injection tests. Run the validation test suites you set up to get a baseline report for the reliability posture of your early-adopter services.

These first results will usually return a lot of existing reliability risks, which can be a good thing. It means your resiliency testing is effectively uncovering reliability risks before they cause outages.

Once you have your current reliability posture, work with the operators of those services to prioritize the risks. Most services will have urgent risks that are more likely and could have a big impact if they cause an outage and minor risks that are less likely and have a smaller impact.

Your goal at this stage is to select a few high-priority risks and remove them from your deployment.

5. Address high-priority risks, then verify your fixes

After the operators have had a chance to address the issues, run the same test suites again to see if the fixes were successful. Once you’ve verified the fixes, gather up the results and review them with the rest of your team.

You should have a list of critical Kubernetes risks that you’ve addressed, and by looking at the before and after results from risk monitoring and validation tests, you’ll be able to show the effectiveness of your resiliency efforts—and show exactly how you’ve improved the reliability of your Kubernetes deployment.

Do it all with a 30-day trial from Gremlin

Gremlin offers a free trial that includes all of the capabilities you need to take the actions above. Over the course of four weeks, you’ll be able to stretch your resiliency wings, prove the effectiveness of your efforts, and have a lasting impact on the reliability of your Kubernetes deployment.

Download the comprehensive eBook

Learn how your own resiliency management practice for Kubernetes in the 55-page guide Kubernetes Reliability at Scale: How to Improve Uptime with Resiliency Management

Thanks for requesting

Kubernetes Reliability at Scale:

How to Improve Uptime with Resiliency Management.

(A copy has also been sent to your email.)