Reliability Intelligence

Supported platforms:

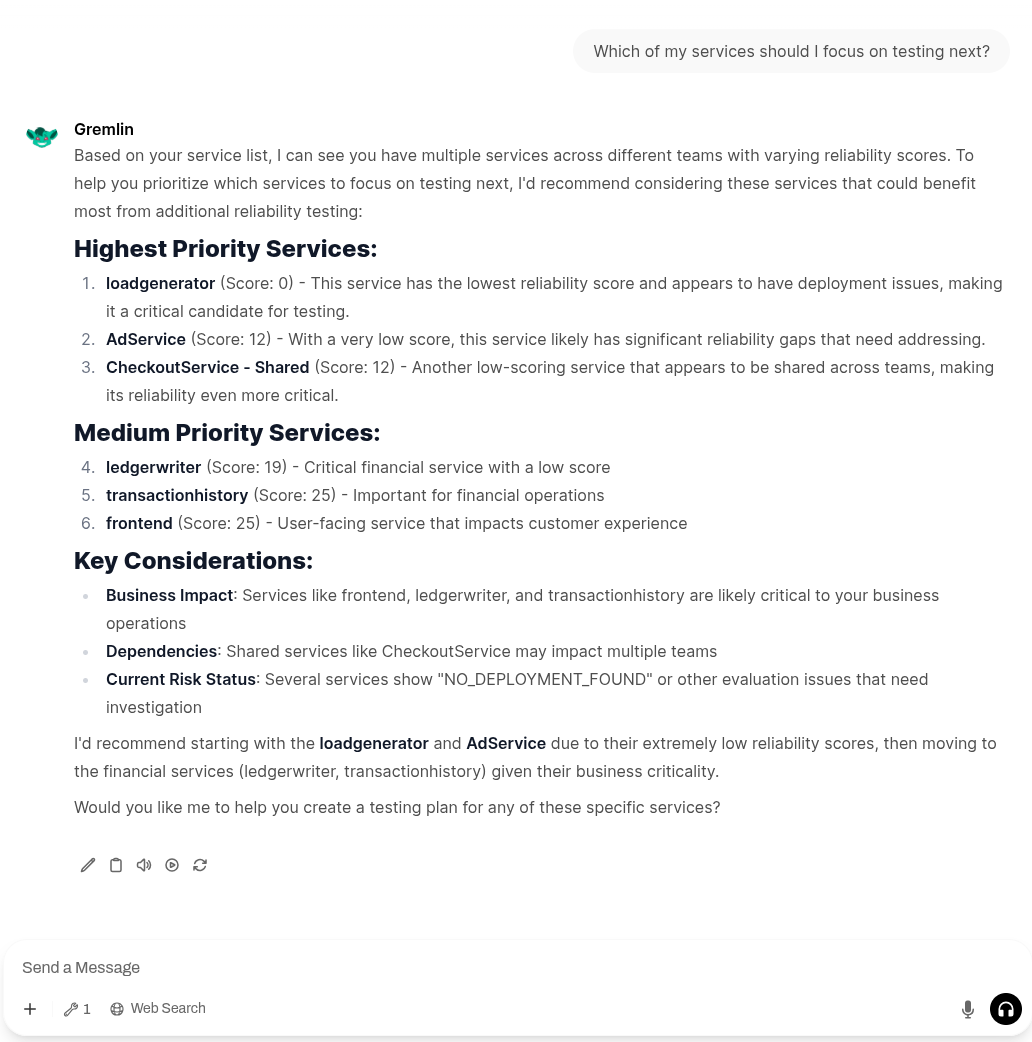

Reliability Intelligence analyzes your Gremlin environment to provide real-time reliability insights and recommendations. This includes identifying failed reliability tests and offering step-by-step instructions to remediate the underlying problems. Gremlin also provides a Model Context Protocol (MCP) server, which lets you integrate Gremlin into your LLM or AI service of choice.

How Reliability Intelligence works

Reliability Intelligence runs automatically, uncovering reliability risks in your Gremlin organization and recommending resolutions.

Experiment analysis and remediation

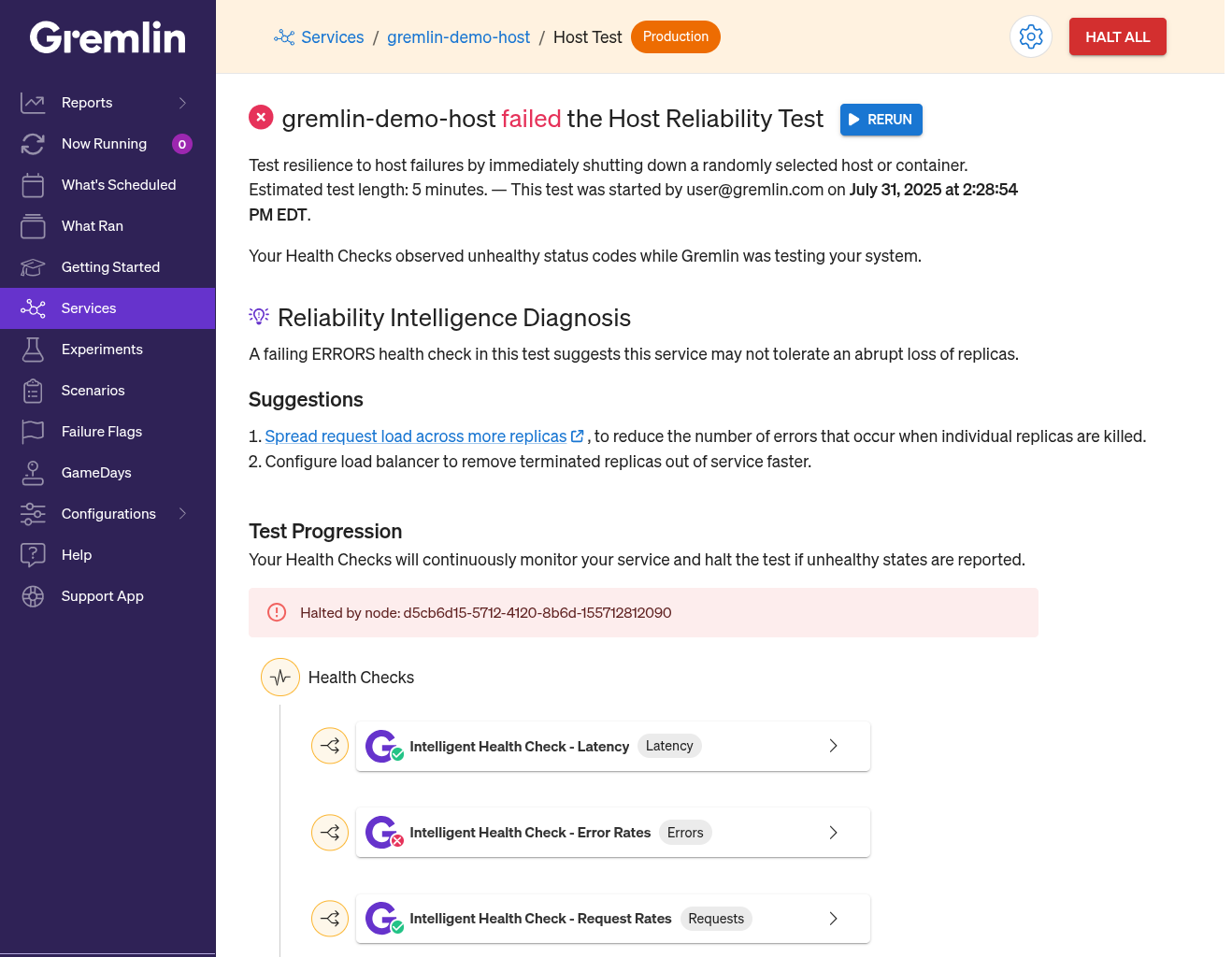

Reliability Intelligence can determine the root cause of failed tests and suggest remediations tailored to your service. It uses context from your service, including:

- The type of test that was run.

- The type of service that the test ran on.

- The Health Check that raised the error.

- Any unusual events during the test (such as an out-of-memory event).

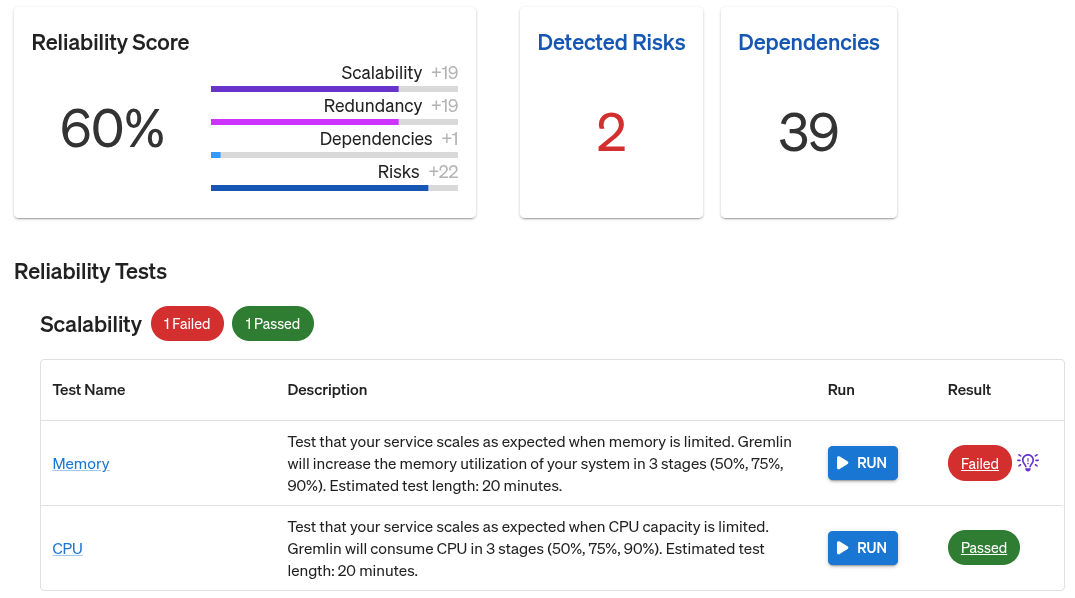

For example, here is a Kubernetes deployment that failed its Memory Scalability test. The icon next to the “failed” result means Reliability Intelligence has insight into this test result.

In this case, Kubernetes’ out-of-memory (OOM) killer terminated one of the pods in the deployment, which in turn increased the error rate. Gremlin diagnosed that these two events are related and that we can fix the problem by increasing our replica count or reserving more memory in advance.
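As a rough illustration of the two remediations named above (more replicas, or more memory reserved up front), the following minimal sketch builds a patch body for a Kubernetes Deployment. The container name `web` and the values `3`, `512Mi`, and `1Gi` are hypothetical placeholders, not values recommended by Gremlin. Only the standard Deployment fields `spec.replicas` and `resources.requests` / `resources.limits` are assumed.

```python
import json


def build_memory_remediation_patch(container_name, replicas, memory_request, memory_limit):
    """Build a patch body that raises the replica count and reserves more memory.

    All argument values are illustrative; choose them based on your own
    service's observed memory usage.
    """
    return {
        "spec": {
            # More replicas: losing one pod to an OOM kill affects less traffic.
            "replicas": replicas,
            "template": {
                "spec": {
                    "containers": [
                        {
                            # Identifies which container in the pod to change.
                            "name": container_name,
                            "resources": {
                                # Memory reserved for the container at scheduling time.
                                "requests": {"memory": memory_request},
                                # Upper bound before the container is OOM-killed.
                                "limits": {"memory": memory_limit},
                            },
                        }
                    ]
                }
            },
        }
    }


# Hypothetical values for illustration only.
patch = build_memory_remediation_patch("web", 3, "512Mi", "1Gi")
print(json.dumps(patch, indent=2))
```

A patch like this could be applied with your usual deployment tooling. After applying it, rerunning the Memory Scalability test shows whether the change resolved the failure.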

Enabling Reliability Intelligence

Reliability Intelligence is enabled by default.

Enabling LLM access for Reliability Intelligence

You can optionally grant Gremlin permission to use a large language model (LLM) for Reliability Intelligence. Doing so gives you more detailed diagnoses and recommendations as Gremlin improves Reliability Intelligence.

To enable LLM access:

1. Open the Company Settings page.

2. Next to Advanced, click Edit.

3. Select the checkbox next to Allow LLM access.

4. Click Save.

To disable LLM access, repeat steps 1 and 2, uncheck the box, and click Save.

Using the Gremlin MCP server

Gremlin provides a Model Context Protocol (MCP) server, which lets you interact with your Gremlin account through the AI agent of your choice. To use the Gremlin MCP server, you need:

- A Gremlin account and REST API key.

- An AI or LLM interface that can run MCP servers, such as Claude Desktop.

See the GitHub repository for detailed instructions on configuring and using the Gremlin MCP server.
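For orientation only, here is a minimal sketch of the general shape of an MCP server entry in a Claude Desktop configuration file, which registers servers under an `mcpServers` key with a `command`, `args`, and `env`. The server name `gremlin`, the launch command, the arguments, and the environment variable name `GREMLIN_API_KEY` are all hypothetical placeholders. Take the actual values from the GitHub repository.

```python
import json


def build_mcp_server_entry(command, args, api_key):
    """Build a Claude Desktop style `mcpServers` configuration fragment.

    The server name, command, args, and environment variable name used here
    are placeholders; the real values come from the Gremlin MCP repository.
    """
    return {
        "mcpServers": {
            "gremlin": {  # hypothetical server name
                "command": command,
                "args": list(args),
                # Hypothetical variable name for the Gremlin REST API key.
                "env": {"GREMLIN_API_KEY": api_key},
            }
        }
    }


# Placeholder values; replace them with those given in the repository.
config = build_mcp_server_entry(
    command="<launch-command-from-repo>",
    args=["<arguments-from-repo>"],
    api_key="<your-gremlin-rest-api-key>",
)
print(json.dumps(config, indent=2))
```

Keeping the API key in the `env` section rather than in `args` avoids exposing it in process listings. You may still prefer to load it from a secret store rather than writing it to the file.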