Reliability lessons from the 2025 AWS DynamoDB outage

On October 19th and 20th, 2025, the AWS region US-EAST-1 suffered a massive outage. What started with a 3-hour Amazon DynamoDB outage from a DNS issue led to an Amazon EC2 outage that lasted an additional 12 hours before normal service was restored. Over the course of the outage, there were over 17 million outage reports as companies like Snapchat, Roblox, Amazon, Reddit, Venmo, and more were impacted.

Unfortunately, infrastructure outages like this are going to happen eventually. No matter how good the maintenance and updates, things will break, storms will flood data centers, or automated DNS systems will make errors. Frankly, it speaks to the incredible effort, investment, and skill of cloud providers and their engineers that outages don’t happen more often.

Everything fails all the time.” — Werner Vogels, Amazon CTO

The question is: what can your company do to minimize, or even prevent, the impact of provider outages like these?

Because, ultimately, when your application doesn’t respond, your customers aren’t going to blame AWS. They’re counting on you to make sure your application is reliable, so it’s worth the effort to make sure you’re prepared for cloud provider outages.

Map and test your dependencies (including DynamoDB)

Modern complex systems are massive webs of internal and external dependencies. An individual service can easily have dozens of dependencies, and each of those, in turn, can have a dozen more. These dependencies can include everything from observability agents to validation services to databases, which often means DynamoDB.

That’s really one of the key lessons from this outage. Many of the applications that ran into issues during the outage had DynamoDB in US-EAST-1 as a dependency. When it suddenly wasn’t available, the apps went down. And when your application is running on the cloud, you’re going to find a lot of dependencies rooted in cloud provider services.

Making sure you’re prepared for dependency outages boils down to two parts:

- Know the dependencies of your service

- Know how your service reacts if those dependencies are unavailable

Part of this exercise also includes determining which dependencies are critical and which aren’t. When a non-critical dependency is unavailable, then the service should still be able to function, though usually with limited capabilities or performance.

Critical dependencies, on the other hand, will take down a service if unavailable. With these, you’ll have to make key decisions as an organization.

For example, you may have a critical dependency on DynamoDB in US-EAST-1, but full redundancy on AWS or with another cloud provider could easily double the cost. Is that worth it to your organization? What about a lower performance, cheaper, “just in case” backup option. Would that be worth it? And if they aren’t, then what’s the incident response playbook for when the dependency goes down?

You need to be able to have these conversations before the outage occurs. Then on the off-chance an outage does happen, your team and your system will be prepared, whether it’s automatic failover to a redundant region, firing up a backup, or running through the playbook.

Test your infrastructure (including EC2)

The second part of the October outage was the impact on spinning up new EC2 instances. While EC2 instances spun up before the event stayed healthy, for about 12 hours after the DynamoDB issue was resolved, customers ran into issues spinning up new EC2 instances in US-EAST-1.

To make sure you’re prepared for these kinds of outages, make sure you know how your system reacts to resources in specific zones behind unavailable.

And that really comes down to redundancy. We’ve all heard it before, and it’s even a big part of the Well-Architected Framework. But even if you’ve designed your systems for multi-zone or multi-region redundancy, you need to make sure everything rolls over correctly.

After all, the worst time to find out the configurations are wrong is during an outage, and running a test ahead of time allows you to make sure everything functions correctly.

How to recreate similar failures with Gremlin

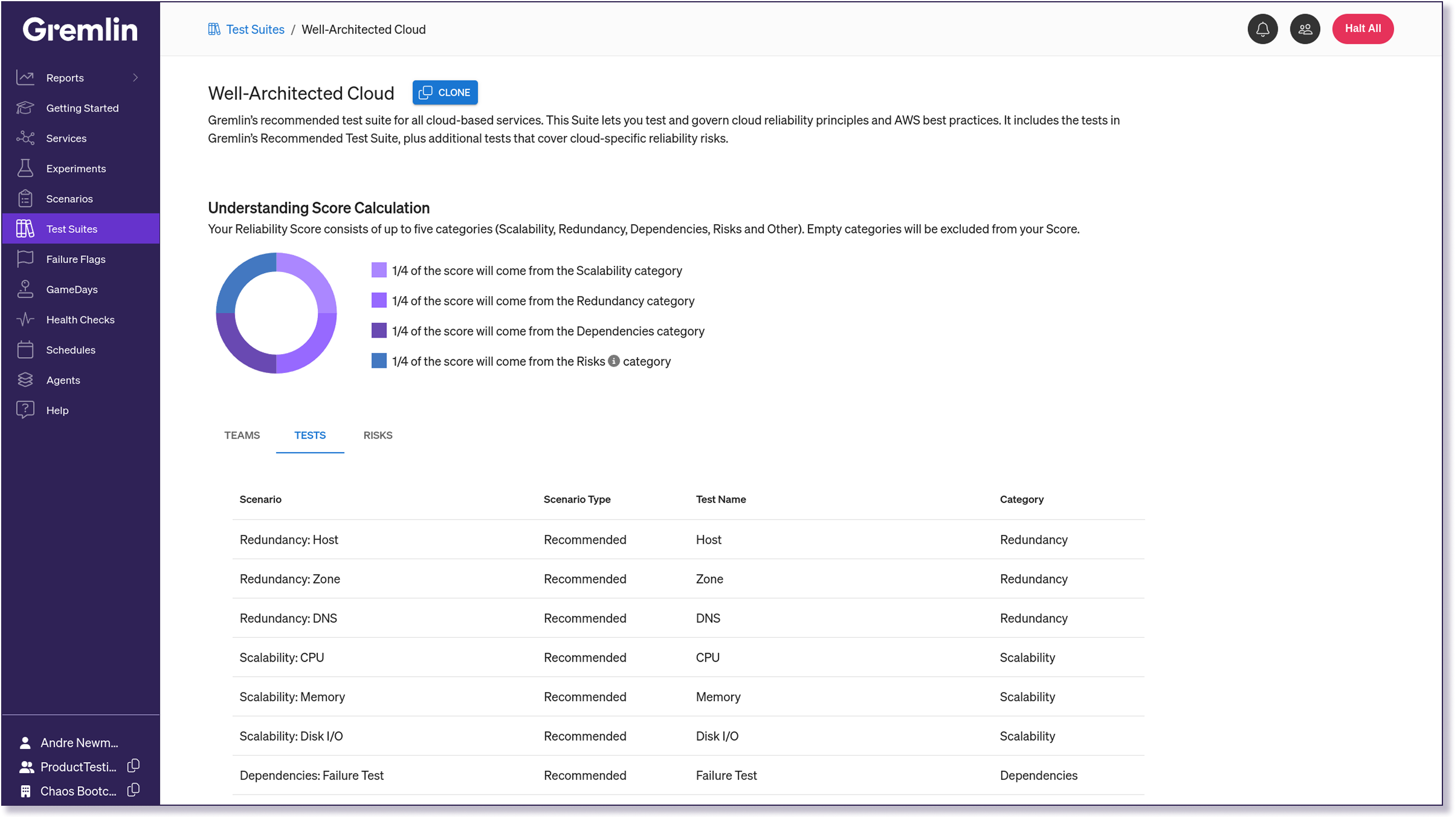

While an AWS outage is rare, dependency failure and resource scaling/redundancy issues are two of the most common failures behind outages. Using Gremlin, you can create custom experiments to recreate these failures, but they’re also part of both the Recommended Test Suite and the Well-Architected Cloud Test Suite.

Dependency Discovery

Before you start testing, you’ll want to make sure you know all of the dependencies for your service. When you set up a service with Gremlin, it will use Dependency Discovery to automatically uncover any dependencies by monitoring a service’s network traffic and DNS requests.

Usually, this will identify unknown dependencies, and this full visibility helps teams make sure they’re testing all of the possible failure modes.

Dependencies: Failure Test

While the actual issue behind the outage was that DynamoDB in US-EAST-1 was unavailable, you obviously can’t go to AWS and ask them to shut off DynamoDB so you can test your systems. Fortunately, you don’t have to. Whether the outage is at an AWS data center or the wrong network cable is cut by a backhoe, it means the same thing to your application: DynamoDB is unavailable.

We can simulate this for any dependency by cutting all network traffic to and from that dependency, including DynamoDB. In Gremlin, this is done by selecting an identified dependency, setting the unavailability time (e.g. 10 minutes), then running a Dependency Failure test.

Redundancy Test: Zone

To recreate the EC2 outage, we’ll want to look at resource tests to test redundancy. With Gremlin, a Redundancy Test follows similar logic as the failure test above to make specific resources unavailable by cutting off network traffic to hosts, zones, and DNS servers.

Now, you might be tempted to try the DNS test. After all, the DynamoDB issue was caused by a DNS issue. However, AWS experienced the DNS issue, not your application. All your application would see is that DynamoDB isn’t available and that EC2 isn’t reachable for new servers. Running a DNS server test from your end will verify what happens when your service can’t access a DNS server, but that won’t recreate this outage.

Instead, use the Zone redundancy test to make a zone unavailable. This completely disconnects your application from dependencies running in the zone so you can ensure they failover correctly.

Testing means you’re prepared

The biggest lesson from major outages like these is the importance of making sure your teams are prepared and informed.

By testing dependencies and redundancy, your teams get visibility into how your systems and how they’ll react. You’ll be able to address the risks you’re willing to invest in, and plan for the ones you’re not.

And then the next time an outage like this happens, your team won’t be caught off guard.

Gremlin's automated reliability platform empowers you to find and fix availability risks before they impact your users. Start finding hidden risks in your systems with a free 30 day trial.

sTART YOUR TRIALHow to be prepared for cloud provider outages

Check out these testing best practices teams should follow to minimize the impact of cloud provider outages so they don’t catch you by surprise.

Check out these testing best practices teams should follow to minimize the impact of cloud provider outages so they don’t catch you by surprise.

Read more